In our journey toward memory safety, Microsoft invests a lot of time researching and investigating potential solutions. Securing legacy code is crucial because the vast majority of existing codebases are written in unsafe programming languages.

Hardware solutions are appealing because, compared to purely software solutions, they offer very strong security properties at a lower cost. There is a lot of published research on MTE and CHERI, and we strongly encourage you to read it. The blog post “Survey of security mitigations and architectures, December 2022” contains a great overview and numerous references to publications by various parties.

The IoT and embedded ecosystems present particular difficulties. Unfortunately, these ecosystems are built on a wide range of C/C++ (mostly C) codebases that run with no mitigations in place, mainly because of actual or perceived overheads. This means that many memory safety bugs are easy to exploit. Existing hardware also frequently offers no isolation at all. When it does, it is often limited to two to four privilege levels and a few isolated memory regions, which cannot scale to object-granularity memory safety.

In November 2022, we (MSR, MSRC, and Azure Silicon) published a new blog post for these spaces: “What’s the smallest variety of CHERI?” The goal is to design a CHERI-based microcontroller that explores whether and how we can obtain very strong security guarantees if we are willing to co-design the instruction set architecture (ISA), the application binary interface (ABI), the isolation model, and the core components of the software stack. The results are outstanding, and in that blog post we describe in detail how our microcontroller achieves the following security properties:

- Deterministic mitigation for spatial safety (using CHERI-ISA capabilities).

- Deterministic mitigation for temporal safety of the heap and cross-compartment stacks (using a load barrier, zeroing, revocation, and a one-bit information flow control scheme).

- Fine-grained compartmentalization (using a small monitor and additional CHERI-ISA features).

In early February 2023, we open-sourced the hardware and software stack on GitHub, along with our tech report, “CHERIoT: Rethinking Security for Low-Cost Embedded Systems.” The release includes an executable formal specification of the ISA, a reference implementation of that ISA built on the Ibex core from lowRISC, and a clean-slate privilege-separated embedded operating system.

This blog post aims to introduce the platform to security researchers so they can get their hands dirty and experiment with it directly. Everyone is encouraged to contribute to the project and find bugs.

CHERIoT: Getting Started

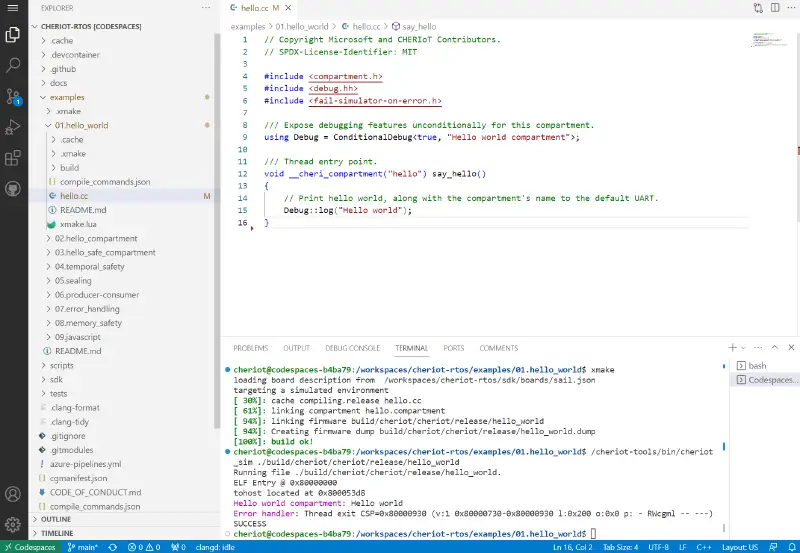

The Getting Started Guide gives an overview of how to build and install each dependency. You can avoid most of these steps by using the dev container we provide, either with a local Visual Studio Code installation or with GitHub Codespaces.

Using GitHub Codespaces is easy, fast, and convenient. Create a new Codespace, select the microsoft/cheriot-rtos repository, and you’re done. You get an IDE in your browser with all dependencies preinstalled. From then on, you can launch the Codespace from anywhere whenever you want to work on the project.

This lets you navigate the code with syntax highlighting and autocompletion configured to work with the CHERIoT language extensions, and it provides a terminal for building the components and running the simulator built from the formal ISA model.

To run the examples, simply enter the directory for each one, run xmake to build it, and then pass the resulting firmware image to /cheriot-tools/bin/cheriot_sim:

The cheriot_sim tool supports instruction-level tracing. If you pass -v, it will print every instruction that’s executed, letting you see exactly what’s happening in your code.

You can, of course, also work locally in your preferred environment. We provide a dev container image with the dependencies preinstalled. VS Code and other editors that support the Development Containers specification should use it by default. You can also build the dependencies yourself. If our instructions don’t work in your preferred environment, please file an issue or raise a PR.

Threat model

We offer strong guarantees between compartments, plus defense in depth that makes it difficult to compromise any individual compartment. We discuss these separately.

Intracompartment

In our blog post “What’s the smallest variety of CHERI?”, we provided the low-level details of each memory safety property that the compiler and the runtime environment can enforce using CHERI:

- Stack spatial safety.

- Spatial safety for objects with static storage duration.

- Spatial safety for pointers passed into the compartment.

- Temporal safety for pointers passed into the compartment.

- Heap spatial safety.

- Heap temporal safety.

- Pointer integrity (pointers cannot be faked or corrupted).

The first two of these properties must be enforced by the compiler. As a result, while none of the other properties can be violated by assembly code, these two can.

This lets us impose very strong memory safety on legacy C/C++ (and buggy-but-not-actively-malicious assembly) code within the compartment. For instance, everything in the network stack, media parsers, and other components that handle untrusted input and object management gets automatic bounds checking and lifetime enforcement.

Any instruction that violates our security rules is guaranteed to trap, so it cannot cause any damage. This is why the mitigations we get are deterministic.
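To make "deterministic" concrete, here is a minimal host-side sketch of a bounded pointer. It is only a software analogy: `BoundedPtr`, `load`, and `narrow` are hypothetical names invented for illustration, and real CHERI capabilities are enforced by the hardware and also carry a tag and permissions that this model leaves out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

// Hypothetical software model of a bounded pointer (not the CHERIoT API).
struct BoundedPtr
{
	const std::vector<uint8_t> *memory; // backing store being modelled
	size_t base;                        // first byte this pointer may touch
	size_t length;                      // number of bytes it may touch
};

// Returns the byte at `offset`, or std::nullopt to model a trap.
// The same access always produces the same outcome.
inline std::optional<uint8_t> load(const BoundedPtr &p, size_t offset)
{
	if (offset >= p.length)
	{
		return std::nullopt; // out of bounds: this is where CHERI would trap
	}
	return (*p.memory)[p.base + offset];
}

// Derives a narrower pointer: bounds can shrink but never grow.
inline std::optional<BoundedPtr> narrow(const BoundedPtr &p, size_t offset, size_t length)
{
	if (offset > p.length || length > p.length - offset)
	{
		return std::nullopt;
	}
	return BoundedPtr{p.memory, p.base + offset, length};
}

// Small usage example.
inline void bounds_demo()
{
	std::vector<uint8_t> heap{10, 20, 30, 40, 50, 60};
	BoundedPtr whole{&heap, 0, heap.size()};
	std::optional<BoundedPtr> object = narrow(whole, 2, 3); // bytes 30, 40, 50
	assert(object.has_value());
	assert(load(*object, 2).value() == 50);  // last valid byte
	assert(!load(*object, 3).has_value());   // one past the end is refused
}
```

The point of the sketch is that the out-of-bounds access is refused every time, regardless of memory layout or timing, which is what distinguishes this kind of mitigation from a probabilistic one.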

Intercompartment

Each compartment is an isolated, distinct security context. No compartment should be able to compromise the object model created by another compartment. We assume arbitrary code execution within a compartment, so an attacker may have their own compartment running their own code, and we want to enforce the following security properties on the system:

- Integrity: Unless specifically told to do so, an attacker cannot change the state of another compartment.

- Confidentiality: Unless explicitly shared, an attacker cannot access the data from another compartment.

- Availability: An attacker cannot prevent another thread of equal or higher priority from making progress.

Integrity is generally the most crucial of these, because an attacker who can violate integrity can alter code or data to leak information or obstruct progress. Confidentiality is the next most crucial: even a simple IoT device, such as an Internet-enabled lightbulb, could leak one bit of internal state to an attacker and thereby reveal whether or not the house is occupied.

The importance of availability is situational. For safety-critical devices, it may matter more than either of the other two properties (most people with a pacemaker, for example, would prefer that an attacker tamper with their medical records rather than remotely cause a heart attack). For other devices, it is a minor inconvenience if the device (or a service on it) crashes and needs to be reset, particularly when the boot time is very short.

Our threat model currently covers integrity and confidentiality; work on availability is still ongoing. For instance, a mechanism exists that lets compartments detect and recover from faults, but it is not yet used everywhere. Any bug that violates confidentiality or integrity must therefore be fixed before anyone considers using the platform for safety-critical tasks.

Mutual distrust

Our system is built on mutual distrust. This is a little surprising in the case of some core components. The memory allocator, for instance, is trusted by its users not to hand the same memory to multiple callers, and it has access to all of the heap memory. However, calling the memory allocator does not give it access to any non-heap memory in your compartment. Similarly, the scheduler time-slices the core between a set of threads, but none of them needs to trust it for confidentiality or integrity: it holds only an opaque handle that cannot be dereferenced, which lets it select which thread runs next but not access the associated state. The majority of operating systems, by contrast, are based on a hierarchical ring model of trust, in which hypervisors can view all state owned by their guests and kernels can view all state owned by the processes they run.

In our mutual-distrust model, gaining total control requires compromising several compartments. Compromising the scheduler does not give you access to all threads, although you can try to attack threads that rely on scheduler services for things like locking. Compromising the memory allocator lets you violate heap memory safety and attack compartments that depend on the heap.
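The scheduler's position can be illustrated with a small host-side analogy in which C++ access control stands in for CHERI sealing. Everything here (`ThreadState`, `SealedThread`, `SwitcherModel`, `SchedulerModel`) is a hypothetical name made up for this sketch, not CHERIoT RTOS code, and C++ access control is only a compile-time convention, whereas sealing is enforced by the hardware.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a thread's saved register state.
struct ThreadState
{
	int timesResumed = 0;
};

class SwitcherModel; // the only party allowed to "unseal"

// Opaque handle: it can be stored, copied, and passed around, but its payload
// is private, so holders other than SwitcherModel cannot reach the state.
class SealedThread
{
	friend class SwitcherModel;
	ThreadState *state;
	explicit SealedThread(ThreadState *s) : state(s) {}
};

class SwitcherModel
{
	public:
	static SealedThread seal(ThreadState &s) { return SealedThread{&s}; }
	static void resume(const SealedThread &t) { ++t.state->timesResumed; }
};

// Round-robin scheduler: decides which handle runs next, and nothing more.
class SchedulerModel
{
	std::vector<SealedThread> threads;
	size_t next = 0;

	public:
	void add(SealedThread t) { threads.push_back(t); }

	// Precondition: at least one thread has been added.
	const SealedThread &pick_next()
	{
		const SealedThread &t = threads[next];
		next = (next + 1) % threads.size();
		return t;
	}
};

// Small usage example: three scheduling decisions over two threads.
inline void scheduler_demo()
{
	ThreadState a, b;
	SchedulerModel scheduler;
	scheduler.add(SwitcherModel::seal(a));
	scheduler.add(SwitcherModel::seal(b));
	for (int i = 0; i < 3; ++i)
	{
		SwitcherModel::resume(scheduler.pick_next());
	}
	assert(a.timesResumed == 2 && b.timesResumed == 1);
}
```

In this model a buggy or malicious `SchedulerModel` can starve a thread by never picking it, which is an availability problem, but it has no way to read or modify a `ThreadState`.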

Examples

To see how some of the guarantees are achieved, let’s remove some of the checks and see what exploitation primitives we get.

Example #1: (Try) attacking the scheduler

For instance, let’s remove a check in a code path called by the scheduler and examine the interesting reasons why system availability is unaffected. In our blog post, we stated:

The scheduler does not have access to a thread’s register state because of the sealing operation. The scheduler can tell the switcher which thread to run next, but it cannot violate compartment or thread isolation. It is in the TCB for availability (it may refuse to run any threads) but not for confidentiality or integrity.

Because the scheduler is not in the TCB for integrity or confidentiality, any potential bugs there affect only availability.

Note that the scheduler has no error handler installed. This is because the switcher forcibly unwinds to the calling compartment when no error handler is present. This eliminates the majority of potential DoS attacks on the scheduler, because a fault always forces an unwind to the caller without affecting the availability, integrity, or confidentiality of the system. The scheduler is designed to make sure that its state is always consistent before it dereferences a user-provided pointer that might trap.

The check we are going to remove is an important one. Take a look at heap_allocate_array in sdk/core/allocator/main.cc:

```cpp
[[cheri::interrupt_state(disabled)]] void *
heap_allocate_array(size_t nElements, size_t elemSize, Timeout *timeout)
{
	...
	if (__builtin_mul_overflow(nElements, elemSize, &req))
	{
		return nullptr;
	}
	...
}
```

As you can see, we have a check for integer overflow on the allocation size. Because we are using pure-capability CHERI, even without this check the allocation cannot be accessed outside of its bounds. That is because, no matter what size the heap allocates, the capability still carries the exact bounds. We’ll simply trigger a CHERI exception on the first load/store that goes out of bounds (yes, CHERI is fun!).

Let’s see that happen in practice. We will remove the integer overflow check and trigger a call to heap_allocate_array with arguments that overflow and trigger a small allocation (when the caller intends for a much, much bigger one). Normally, such scenarios result in wild copies. But again, with CHERI-ISA, the processor won’t let that happen.

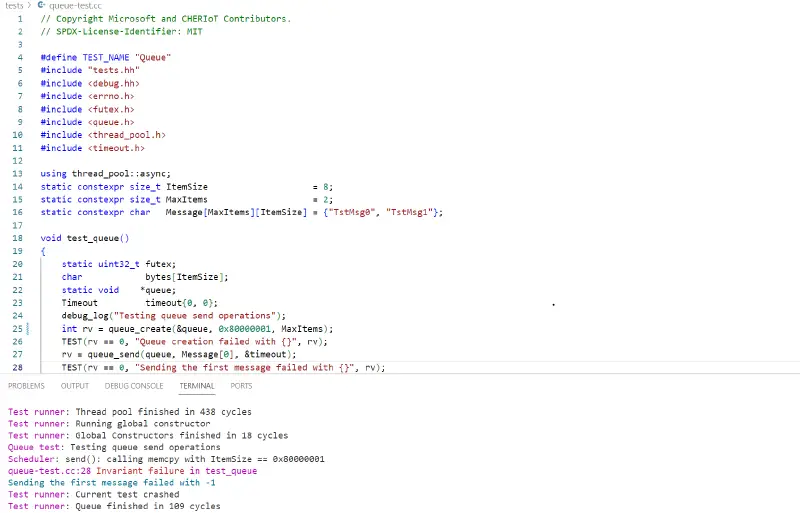

The scheduler (sdk/core/scheduler/main.cc) exposes Queue APIs. One of them is queue_create, which calls calloc with our controlled arguments. And in turn, calloc calls heap_allocate_array. You can look at the producer-consumer example in the project, to see how you can use this API. It’s very simple.

For our example, we made the following changes to trigger the bug from the queue-test under tests:

Change the value passed as itemSize in tests/queue-test.cc:

```diff
- int rv = queue_create(&queue, ItemSize, MaxItems);
+ int rv = queue_create(&queue, 0x80000001, MaxItems);
```

Remove the integer overflow check in sdk/core/allocator/main.cc:

```diff
- size_t req;
- if (__builtin_mul_overflow(nElements, elemSize, &req))
- {
-     return nullptr;
- }
+ size_t req = nElements * elemSize;
+
+ //if (__builtin_mul_overflow(nElements, elemSize, &req))
+ //{
+ //    return nullptr;
+ //}
```

In sdk/core/scheduler/main.cc (both in queue_recv and queue_send):

```diff
- return std::pair{-EINVAL, false};
+ //return std::pair{-EINVAL, false};
```

And, just for debugging purposes, add the following debug trace in sdk/core/scheduler/queue.h, in the send function, right before the memcpy:

```diff
+ Debug::log("send(): calling memcpy with ItemSize == {}", ItemSize);
  memcpy(storage.get(), src, ItemSize); // NOLINT
```

This will allocate a storage of size 2, and fault in the memcpy. Then, we will force unwind to the calling compartment and fail, as you can see here:

We forced unwind from the scheduler to the queue_test compartment, and we see the failure in the return value of queue_send. The scheduler keeps running, and the system’s availability is unaffected.
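The arithmetic behind this example can be checked on an ordinary host. The sketch below is an illustration rather than the allocator's code: `Size32`, `checked_array_size`, and `unchecked_array_size` are hypothetical names, `uint32_t` is used to model the 32-bit `size_t` of the target, and an element count of 2 is an assumption chosen because it reproduces the size-2 allocation described above. `__builtin_mul_overflow` is a GCC/Clang builtin.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>

// Models a 32-bit size_t so the sketch behaves the same on a 64-bit host.
using Size32 = uint32_t;

// Checked size computation, mirroring the guard in heap_allocate_array.
inline std::optional<Size32> checked_array_size(Size32 nElements, Size32 elemSize)
{
	Size32 req;
	if (__builtin_mul_overflow(nElements, elemSize, &req))
	{
		return std::nullopt; // the real allocator returns nullptr here
	}
	return req;
}

// Unchecked computation: unsigned multiplication wraps modulo 2^32.
inline Size32 unchecked_array_size(Size32 nElements, Size32 elemSize)
{
	return nElements * elemSize;
}

// Small usage example with the item size from the modified test.
inline void overflow_demo()
{
	// 2 * 0x80000001 = 0x1'0000'0002, which does not fit in 32 bits.
	assert(!checked_array_size(2, 0x80000001u).has_value());
	// Without the check, the request silently becomes 2 bytes.
	assert(unchecked_array_size(2, 0x80000001u) == 2u);
}
```

On a conventional system, a caller that believes it owns roughly 4 GiB but actually received 2 bytes is the classic setup for a wild copy. Here the returned capability is bounded to what was really allocated, so the first out-of-bounds byte traps.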

Example #2: Breaking integrity and confidentiality

The switcher is the most privileged element, as we stated in our blog post:

This is responsible for all domain transitions. It belongs to the trusted computing base (TCB) because it is in charge of enforcing some of the crucial guarantees that regular compartments rely on. Thanks to its program counter capability, it has privileged access to the trusted stack register, a special capability register (SCR) that holds the capability pointing to a small stack used for tracking cross-compartment calls on each thread. The trusted stack also contains a pointer to the thread’s register save area. On a context switch (either through an interrupt or by explicitly yielding), the switcher is in charge of saving the register state and passing the scheduler a sealed capability to the thread state.

The switcher has no state other than what it borrows from the running thread via the trusted stack, and it is small enough to be easily audited. Because it always runs with interrupts disabled, it is simple to audit for security. This is crucial because the switcher could violate compartment isolation by failing to clear state properly on a compartment transition, or thread isolation by failing to seal the pointer to the thread state before passing it to the scheduler.

The switcher is the only component that both deals with untrusted data and runs with the access-system-registers permission. At slightly more than 300 RISC-V instructions, it keeps our TCB extremely small.

For instance, let’s remove one check from the switcher and observe how it introduces a vulnerability that breaks the integrity, confidentiality, and availability of the entire system.

In the switcher, at the beginning of compartment_switcher_entry, we have the following check:

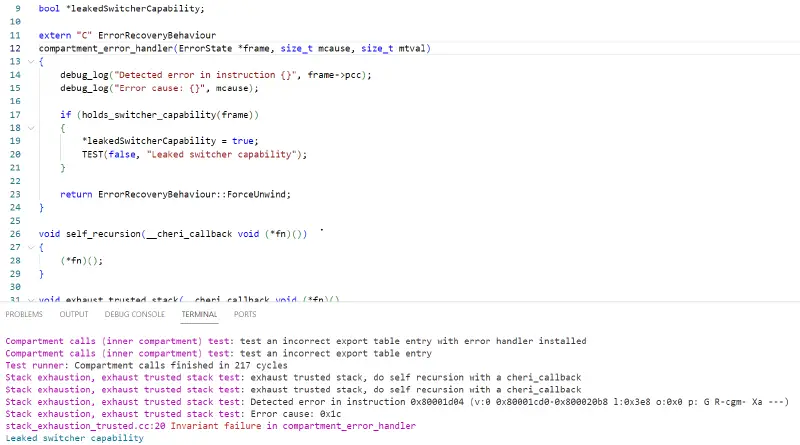

```
// make sure the trusted stack is still in bounds
clhu    tp, TrustedStack_offset_frameoffset(ct2)
cgetlen t2, ct2
bgeu    tp, t2, .Lout_of_trusted_stack
```

This code makes sure the trusted stack is not exhausted and we have enough space to operate on. If not, we jump to a label called out_of_trusted_stack, which triggers a force unwind, which is the correct behavior in such a case. Let’s see what will happen if we remove this check and try to exhaust the trusted stack.
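Before removing it, the effect of this guard can be sketched in a small host-side model. This is an assumption-laden illustration, not the switcher's logic: `TrustedStackModel`, `enter_compartment`, and `ping_pong_depth` are hypothetical names, the sizes are arbitrary, and the model assumes the stack length is a multiple of the frame size.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical model of a per-thread trusted stack: a fixed-size region plus
// the offset of the next free frame.
struct TrustedStackModel
{
	size_t frameOffset; // bytes currently in use
	size_t length;      // total bytes available (assumed multiple of frameSize)
	size_t frameSize;   // bytes consumed by each cross-compartment call
};

enum class EntryResult
{
	Entered,
	ForceUnwind
};

// Models the guard: compare the frame offset against the length and refuse
// the call once the trusted stack is full (the bgeu in the assembly above).
inline EntryResult enter_compartment(TrustedStackModel &ts)
{
	if (ts.frameOffset >= ts.length)
	{
		return EntryResult::ForceUnwind;
	}
	ts.frameOffset += ts.frameSize;
	return EntryResult::Entered;
}

// Cross-compartment calls that never return: each one pushes a frame, so the
// loop ends only when the guard fires. Returns the depth that was reached.
inline size_t ping_pong_depth(TrustedStackModel ts)
{
	size_t depth = 0;
	while (enter_compartment(ts) == EntryResult::Entered)
	{
		++depth;
	}
	return depth;
}

// Small usage example: a 64-byte stack with 16-byte frames holds four calls.
inline void trusted_stack_demo()
{
	assert(ping_pong_depth(TrustedStackModel{0, 64, 16}) == 4);
}
```

With the guard in place, unbounded recursion across compartments ends in a clean force unwind at a fixed depth. The rest of this example looks at what happens when that comparison is missing.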

The first question is how one would exhaust the trusted stack. This is actually quite straightforward: all we need to do is keep making cross-compartment calls without returning, which consumes more and more trusted stack memory. The test we added in tests/stack_exhaustion_trusted.cc does the following:

- We define a __cheri_callback (a safe cross-compartment function pointer) in the stack-test compartment, which calls the entry point of another compartment. Let’s call that compartment stack_exhaustion_trusted.

- The entry point of stack_exhaustion_trusted gets a function pointer and calls it.

- Since the function pointer points to code in stack-test, the call triggers a cross-compartment call to the stack-test compartment, which again calls the entry point of the stack_exhaustion_trusted compartment, and this keeps running in an infinite recursion until the trusted stack is exhausted.

With the check removed, the switcher faults once the trusted stack is exhausted, and we reach the fault-handling path, which either unwinds or calls an error handler. The fault is in the switcher, but it is handled as if it were in the calling compartment. When the switcher calls the compartment’s error handler, it passes a copy of the register state at the point of the fault. This register dump includes some capabilities that should never be leaked outside of the switcher.

Our malicious compartment can install an error handler, catch the exception, and get registers with valid switcher capabilities! In particular, the ct2 register holds a capability to the trusted stack for the current thread, which includes unsealed capabilities to any caller’s code and globals, along with stack capabilities for the ranges of the stack given by other capabilities. Worse, this capability is global and so the faulting compartment may store it in a global or on the heap and then break thread isolation by accessing it from another thread.

The following functions get an ErrorState *frame (the context of the registers the error handler gets) and detect switcher capabilities:

```cpp
using namespace CHERI;

bool is_switcher_capability(void *reg)
{
	static constexpr PermissionSet InvalidPermissions{Permission::StoreLocal,
	                                                  Permission::Global};
	if (InvalidPermissions.can_derive_from(Capability{reg}.permissions()))
	{
		return true;
	}
	return false;
}

bool holds_switcher_capability(ErrorState *frame)
{
	for (auto reg : frame->registers)
	{
		if (is_switcher_capability(reg))
		{
			return true;
		}
	}
	return false;
}
```

Excellent, we can easily identify switcher capabilities. If we have a valid capability to the switcher compartment, we can take control of the system and violate integrity and confidentiality (as well as availability, but that’s a given).
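The detection above appears to rest on a subset test: a register is flagged when its permissions include both StoreLocal and Global, a combination the code labels as invalid for capabilities a compartment should hold. A minimal bitmask sketch of that test follows. The enum, the bit positions, and the function names are hypothetical and do not match the CHERIoT permission encoding or its `PermissionSet` class.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical bitmask model of a permission set (illustrative bit values).
enum Perm : uint32_t
{
	Global     = 1u << 0,
	Load       = 1u << 1,
	Store      = 1u << 2,
	StoreLocal = 1u << 3,
};

// True when every permission in `wanted` is also present in `held`, that is,
// `wanted` could be obtained from `held` purely by dropping permissions.
constexpr bool can_derive_from(uint32_t wanted, uint32_t held)
{
	return (wanted & held) == wanted;
}

// Flags a capability that is both Global and StoreLocal at the same time.
constexpr bool looks_like_switcher_capability(uint32_t held)
{
	return can_derive_from(Global | StoreLocal, held);
}

// Small usage example with three modelled capabilities.
inline void permission_demo()
{
	assert(!looks_like_switcher_capability(Load | Store | StoreLocal)); // not global
	assert(!looks_like_switcher_capability(Global | Load | Store));     // no StoreLocal
	assert(looks_like_switcher_capability(Global | Load | Store | StoreLocal));
}
```

Only the third case, which carries both bits, is reported. That matches the shape of the check in `is_switcher_capability`, where the register's permissions play the role of `held`.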

Now, we need to implement the logic that exhausts the switcher’s trusted stack and make sure an error handler checks the register context for switcher capabilities.

This is exactly what stack_exhaustion_trusted.cc does. The code is very simple:

```cpp
bool *leakedSwitcherCapability;

extern "C" ErrorRecoveryBehaviour
compartment_error_handler(ErrorState *frame, size_t mcause, size_t mtval)
{
	debug_log("Detected error in instruction {}", frame->pcc);
	debug_log("Error cause: {}", mcause);

	if (holds_switcher_capability(frame))
	{
		*leakedSwitcherCapability = true;
		TEST(false, "Leaked switcher capability");
	}

	return ErrorRecoveryBehaviour::ForceUnwind;
}

void self_recursion(__cheri_callback void (*fn)())
{
	(*fn)();
}

void exhaust_trusted_stack(__cheri_callback void (*fn)(),
                           bool *outLeakedSwitcherCapability)
{
	debug_log("exhaust trusted stack, do self recursion with a cheri_callback");
	leakedSwitcherCapability = outLeakedSwitcherCapability;
	self_recursion(fn);
}
```

And, in stack-test.cc, we define our __cheri_callback as:

```cpp
__cheri_callback void test_trusted_stack_exhaustion()
{
	exhaust_trusted_stack(&test_trusted_stack_exhaustion,
	                      &leakedSwitcherCapability);
}
```

Let’s execute it! All we need to do is to build the test-suite, by running xmake in the tests directory. And then:

/cheriot-tools/bin/cheriot_sim ./build/cheriot/cheriot/release/test-suite

Summary

Scaling CHERI to small cores is, as you can see, a crucial step for embedded and IoT ecosystems. We strongly encourage you to participate in these efforts and contribute to the GitHub project!


Thanks,

Saar Amar, Microsoft Security Response Center

David Chisnall, Hongyan Xia, Wes Filardo, Robert Norton, Azure Research

Yucong Tao, Kunyan Liu, Azure Silicon Engineering & Solutions

Tony Chen, Azure Edge & Platform
