ExecBrief from PinnacleOne: Safe, Secure, and Reliable AI

The PinnacleOne Executive Brief has been re-launched. The P1 ExecBrief offers actionable insights on significant developments spanning geopolitics, cybersecurity, strategic technology, and related policy dynamics. It is designed for corporate executives and senior leaders in risk, strategy, or security roles.

SentinelOne’s response to the Executive Order on Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, which was issued on October 30, 2023, is summarized in our second post.

Please subscribe to read upcoming issues, and forward this newsletter to your colleagues so they can sign up as well.

Please feel free to contact us directly at [email protected] with any comments or questions.

AI that is safe, secure, and trustworthy: Insight Focus

Artificial intelligence now pervades conversations in every industry, posing risks that make analysts uneasy and offering promises that innovators cannot resist. SentinelOne has observed firsthand the growing use of AI by both network defenders and attackers to enhance their respective cyber capabilities.

To ensure responsible AI use, the White House issued an Executive Order in October. The EO tasked NIST with developing guidelines and evaluation capabilities for AI development. SentinelOne responded to NIST’s request for information, drawing on our own knowledge and experience using AI to secure our clients’ systems.

Summary

We responded by assessing the effect of new AI technologies on cybersecurity, both offensively and defensively. We anticipate that these technologies will be used more frequently by both network defenders and malicious actors, effectively ushering in the age of AI vs. AI, even though the current effects are still in their infancy.

Federal policy must support, rather than restrict, research and development initiatives that help American businesses and government organizations keep up with rapidly evolving threats. We describe Purple AI as an example of an AI-enabled cybersecurity technology that we created to spur innovation and stay ahead of emerging risks.

Next, we shared our observations of the AI risk management strategies used across our clients’ industries. We outline three distinct stances that businesses are taking, depending on their risk tolerance, market incentives, and industry considerations. We also summarize the analytical framework we created to help businesses establish and maintain enterprise-wide AI risk management processes and tools.

Finally, we made three specific recommendations urging NIST to take an approach to AI similar to the one it took with the Cybersecurity Framework. Our recommendations place a strong emphasis on voluntary, risk-based guidance, industry-specific framework profiles, and common ground truths and lexicon.

AI’s effects on cybersecurity

Both attackers and defenders can use AI systems as force multipliers. SentinelOne assesses that threat actors, both state and non-state groups, are using AI to enhance existing tactics, techniques, and procedures (TTPs) and increase their offensive potency. Our own Purple AI is one of many rapidly emerging industry technologies that use AI to automate detection and response and strengthen defensive capabilities.

AI is used by cyberattackers to raise the bar.

Because of humans’ enduring fallibility, social engineering is now a part of the majority of cyberattacks. Attackers were using generative AI to gain the trust of unwitting victims even before ChatGPT. By 2022, two-thirds of cybersecurity professionals reported that deepfakes had been part of attacks they investigated during the previous year. In 2019, attackers used AI voice technology to impersonate a CEO’s voice and con another executive out of $233,000. As these technologies proliferate, less experienced, opportunistic hackers will be able to carry out sophisticated social engineering attacks that combine text, voice, and video, increasing the frequency and scope of access operations.

The AI frontier can be fully utilized for advanced cyber operations by highly capable state threat actors, but it will be difficult to identify and attribute these effects. AI may help with malware and exploit development, according to the UK’s National Cyber Security Centre, but in the near future, human expertise will continue to fuel this innovation.

It is important to note that many uses of AI for vulnerability detection and exploitation may leave no indication that AI helped attackers carry out the attack.

AI is used in cyber defenses to boost signal and lessen noise.

SentinelOne has learned a great deal from building our own AI-enabled cyber defense capabilities. We increasingly see that alert fatigue can overwhelm analysts, forcing responders to spend time piecing together complex and ambiguous information. Security problems are becoming data problems. We therefore designed our Purple AI system to ingest large amounts of data and use a generative model that accepts natural language inputs rather than code, helping human analysts speed up threat hunting, analysis, and response.

By combining AI’s power for data analytics with conversational chat, an analyst could use a prompt like “Is my environment infected with SmoothOperator?” or “Do I have any indicators of SmoothOperator on my endpoints?” to search for a particular threat by name. These tools then produce results and context-aware insights based on the observed behavior and any anomalies found in the returned data. They also offer a recommended course of action and suggested follow-up questions. The analyst can then trigger one or more actions with a single click while continuing the conversation and analysis.

Purple AI is one example of how the cybersecurity industry is incorporating generative AI into solutions that let threat hunters and SOC analysts use the power of large language models to identify and respond to attacks more quickly and easily.

Using natural language conversational prompts and responses, even less experienced or under-resourced security teams can quickly uncover suspicious and malicious behaviors that previously required many hours of effort from highly trained analysts. These tools will let threat hunters ask questions about specific, well-known threats and receive prompt answers without having to write manual queries for indicators of compromise.

How PinnacleOne Advises Firms on AI Risk Management

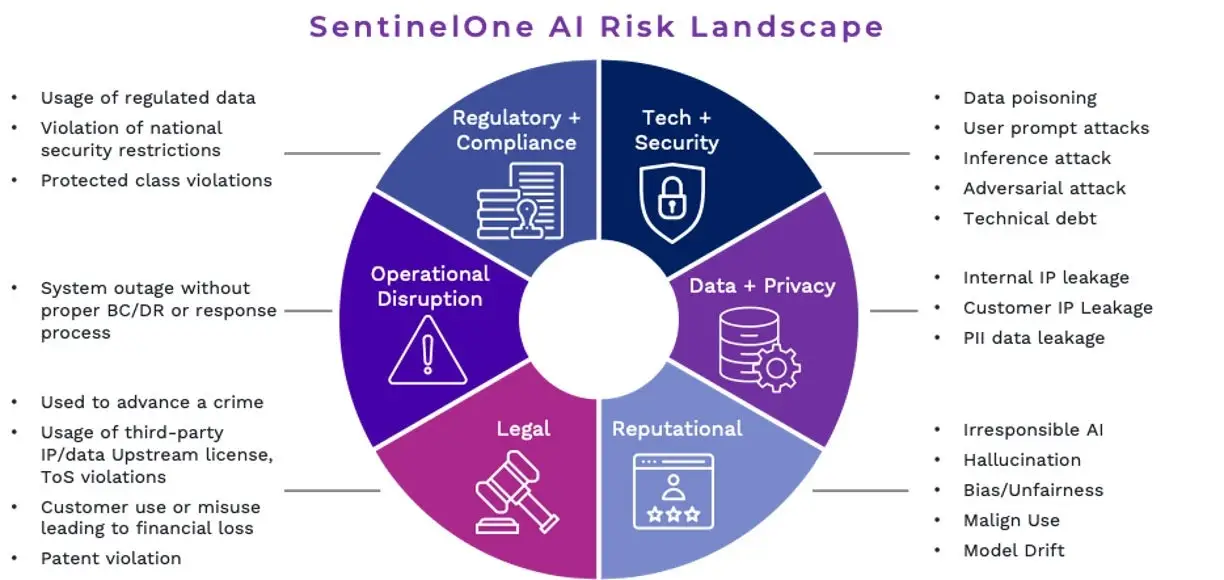

With specific considerations for each, we advise businesses to concentrate on six AI risk management areas:

| (1) Regulatory & Compliance | (4) Reputational |

| (2) Technology & Security | (5) Legal |

| (3) Data & Privacy | (6) Operational Disruption |

We have found that organizations are best positioned to manage AI integration if they already have effective cross-functional teams in place to coordinate infosec, legal, and enterprise technology responsibilities. The relationship between AI development engineers, product managers, trust & safety, and infosec teams, however, presents a common challenge. For instance, in many businesses it is unclear who is responsible for model poisoning and injection attacks, prompt abuse, corporate data controls, and other new AI security challenges. Additionally, technology planning, security roadmaps, and budgets are complicated by the evolving landscape of third-party platform integration, open-source proliferation, and DIY capability sets.

Security and AI

We see common approaches to AI safety and security developing, even though specific tools and technical processes will vary depending on the industry and use case. These strategies ensure that system outputs and performance are reliable, safe, and secure by fusing conventional cybersecurity red teaming methodologies with cutting-edge AI-specific techniques.

AI security and safety assurance requires a much broader approach than traditional information security practices (such as penetration testing) and must include assessments of model fairness and bias, harmful content, and misuse. Any AI risk mitigation framework should encourage businesses to implement a comprehensive set of tools and procedures that address:

- Cybersecurity (for example, compromise of confidentiality, integrity, or access)

- Model security (for example, poisoning, evasion, inference, and extraction attacks)

- Ethical practice (for example, bias, misuse, harmful content, and social impact)

This necessitates security procedures that mimic both malicious threat actors and the unintentional leak of sensitive data or problematic outputs by regular users. An AI safety and security team needs a combination of policy/legal experts, AI/ML engineers, and cybersecurity practitioners to effectively accomplish this.

For specialized use cases, particular procedures and tools will be required. To avoid copyright liability, for instance, the creation of synthetic media may necessitate embedded digital watermarking to show traceability and provable provenance of training data.

Additionally, as AI agents grow more powerful and widespread, a wider range of legal, ethical, and security concerns will arise about what limits should be placed on their ability to act independently in the real world (for example, accessing cloud computing resources, conducting financial transactions, registering as businesses, or posing as people).

As great powers compete for advantage, the implications for geopolitical competition and national security will also grow in significance. Working at the cutting edge of these technologies entails inherent risk, and competitors of the United States may be willing to accept more risk in order to leap ahead. International standard setting and trust-building measures will be required to prevent a race-to-the-bottom competitive dynamic and security spiral.

Common and broad safeguards, as well as particular best practices and security tools tailored to various industries, will be required to manage and mitigate these risks. These should be determined by the type of use case, operational scope, potential externality scale, control effectiveness, and the cost-benefit ratio between innovation and risk. Maintaining this balance will require constant effort given the rate of change.

Recommendations for policy

Our recommendations to NIST place a strong emphasis on voluntary, risk-based guidance, industry-specific framework profiles, and common ground truths and lexicon.

- To ensure a common set of ground truths, NIST should align and cross-walk the Cybersecurity Framework (CSF) and the AI Risk Management Framework (RMF). The companies we advise frequently look to industry standards at peer firms to model their own cybersecurity. With a common set of terms and standards, companies will be able to understand and work toward industry benchmarks that both set the bar and raise it.

- Following the CSF model, NIST should create framework profiles for various sectors and subsectors, including profiles ranging from small and medium-sized businesses to large enterprises. These Framework Profiles would help businesses in these industries align their resources, risk tolerance, and organizational goals with the RMF’s core goals.

- Prescriptive regulation is a topic best suited for congressional and executive action, given the larger national security and economic considerations at stake. In keeping with the successful CSF approach, NIST should strive to maximize voluntary adoption of its guidance that addresses the societal impacts and negative externalities from AI that pose the greatest risk.

We were grateful for the chance to discuss AI risk and opportunity with NIST. We encourage our industry peers to maintain close policy engagement to keep the United States at the leading edge of AI-enabled cybersecurity and ahead of increasingly potent and malicious threats.
