To actively identify risks in generative artificial intelligence ( AI ) systems, Microsoft has made available the PyRIT ( short for Python Risk Identification Tool ) open access automation framework.

According to Ram Shankar Siva Kumar, head of Microsoft’s AI red team, the tool is intended to “enable every organization across the globe to innovate responsibly with the most recent artificial intelligence advances.”

According to the company, PyRIT can be used to evaluate the robustness of large language model ( LLM) endpoints against various harm categories, including fabrication, hallucination, misuse, bias, and prohibited content.

Additionally, it can be used to spot privacy issues like identity theft as well as security risks like malware generation and jailbreaking.

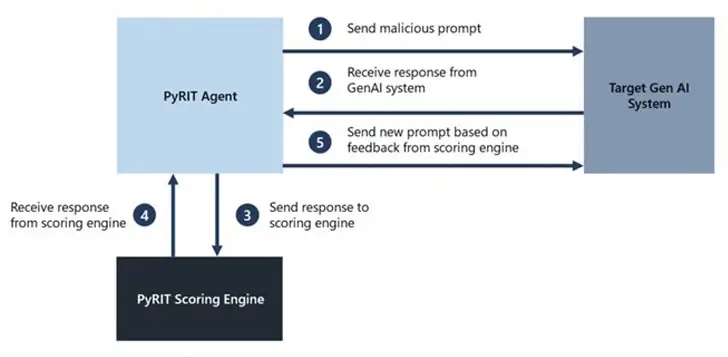

The target, datasets, scoring engine, ability to support multiple attack strategies, and inclusion of a memory component that can store intermediate input and output interactions are all features of PyRIT.

Red team members can use a traditional machine learning classifier or use an LLM endpoint for self-evaluation thanks to the scoring engine’s two available options for scoring the outputs from the target AI system.

According to Microsoft, the objective is to give researchers a baseline for comparing the performance of their model and entire inference pipeline against various harm categories.

” This enables them to have empirical data on the performance of their model today and to identify performance degradation based on future improvements.”

However, the tech behemoth takes care to stress that PyRIT complements a red team’s existing domain expertise rather than replacing manual red teams of generative AI systems.

By creating prompts that can be used to assess the AI system and flag areas that need more investigation, the tool is intended to highlight the risk “hot spots.”

Microsoft also acknowledged that red teaming generative AI systems necessitates simultaneously probing for security and responsible AI risks, and that the exercise is more probabilistic.

Even though it takes time, manual probing is frequently required to find potential blind spots, according to Siva Kumar. Scaling requires automation, but it cannot replace manual probing.

Popular AI supply chain platforms like ClearML, Hugging Face, MLflow, and Triton Inference Server all have multiple critical vulnerabilities that could lead to arbitrary code execution and the disclosure of sensitive data, which is why Protect AI made the development.