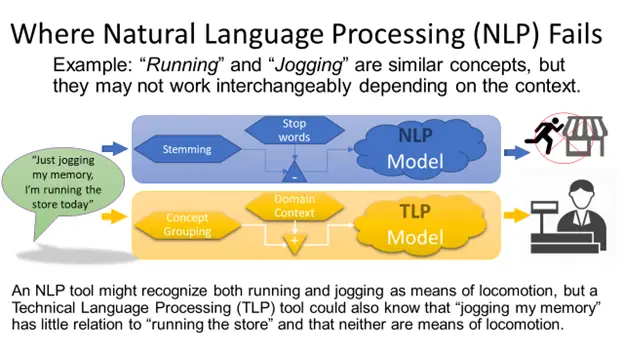

At the 15th Annual Conference of the Prognostics and Health Management (PHM) Society in Utah, NIST helped promote awareness, discussion, and education through the Technical Language Processing (TLP) Tutorial. The NIST TLP Community of Interest (COI), including TLP COI leads Rachael Sexton (Engineering Laboratory) and Michael Sharp (Communications Technology Laboratory), worked with our external partner, Sarah Lukens of the Logistics Management Institute, to produce this event. The tutorial drew 200 attendees and aimed to raise awareness of TLP for industrial dependability and maintenance applications. It highlighted NIST-led resources within the TLP COI, explained what distinguishes technical language from natural language, and introduced some fundamental processes and algorithms.

The tutorial was well received, and attendees showed strong interest in commercial language processing tools. This interest was also reflected in the conference's panel on the use of large language models (LLMs) in PHM and in several papers on related topics.

During the tutorial, attendees also discussed the growing role of generative artificial intelligence (AI) in industry and the new U.S. Executive Order on AI. Although many individuals and some businesses expressed excitement about being early adopters of LLMs and about supporting the U.S. AI initiative, the broader PHM community has not yet taken a unified stance on these issues, and there is uncertainty about how to proceed. Some suggested that adoption could be accelerated by prioritizing and implementing use cases from stakeholders and leading organizations. To that end, attendees expressed interest in guidance and in reasonable assurance of tool quality, dependability, and availability. They also noted that while tools like LLMs are currently free and easy to access, this could change in the future. “Let’s not throw out our engineering best practices just because we have a shiny new toy,” one attendee said, in anticipation of a promised wave of new LLM-based tools.

The LLM discussions also touched on changes in the software solutions space, where many businesses are considering how to monetize their platforms as tools that address a variety of stakeholder needs. Participants identified end-to-end solutions that link spoken or written words to physical parts as high-value targets for the manufacturing and design communities: for example, asking an LLM to design a part, integrating the design with software and 3D printers, and producing prototype parts locally. Extracting and processing text from images of technical documents, such as piping and instrumentation diagrams (P&IDs) and failure mode and effects analyses (FMEAs), was another high-value target that attracted considerable attention. If the text and pictographic relations in technical specification documents could be captured, the development of digital twin models could be sped up, and incoming logs or requests could be processed much more effectively.

The slide deck for this event is available upon request (email: michael[dot]sharp[at]nist[dot]gov). A recorded version of the tutorial is available on the PHM Society website.