A safer systems programming language is required

In the first post of this series, we discussed the need to proactively address memory safety issues. Tools and guidance are demonstrably not preventing this class of vulnerabilities: memory safety issues have accounted for almost the same proportion of the vulnerabilities assigned a CVE for more than ten years. We believe that using memory-safe languages will mitigate this in ways that tools and training have not.

In this post, we’ll look at some real-world examples of vulnerabilities found in Microsoft products, after testing and static analysis, that a memory-safe language could have eliminated.

Memory Safety

Memory safety is a property of programming languages in which all memory access is well defined. Most programming languages in use today are memory-safe because they use some form of garbage collection. However, systems-level languages (the languages used to build the underlying systems that other software depends on, such as OS kernels and networking stacks) cannot afford a heavy runtime like a garbage collector, and are therefore usually not memory-safe.

As mentioned in our previous post, memory safety issues are the root cause of about 70% of the security vulnerabilities that Microsoft fixes and assigns a CVE (Common Vulnerabilities and Exposures). This remains the case despite mitigations such as thorough code review, training, and static analysis.

Memory safety issues continue to account for about 70% of the CVEs that Microsoft assigns each year.

While many experienced programmers can write correct systems-level code, it is clear that, no matter how many mitigations are put in place, writing memory-safe code at scale in traditional systems-level programming languages is nearly impossible.

Let’s examine some real examples of security vulnerabilities caused by using languages without memory safety guarantees.

Spatial memory safety

Spatial memory safety means ensuring that all memory accesses are within the bounds of the type being accessed. To do this, code needs to keep track of these sizes and correctly check every memory access against them.

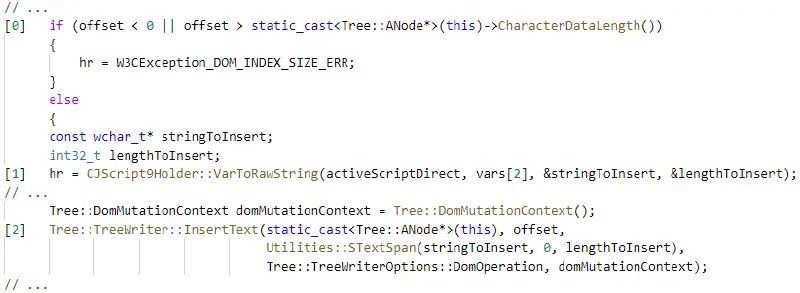

Checks can be missing for an edge case of control flow, or be implemented incorrectly because they do not account for the complexities of integer signedness, promotions, or overflows. Consider this example from Microsoft Edge (CVE-2018-8301), discovered by Alexandru Pitis:

The check at [0] is correct. However, [1] can modify the size of the string, invalidating the offset that was retrieved. The function at [2] then calls a copy function with a different offset than expected, resulting in an exploitable out-of-bounds write.

This vulnerability can easily be fixed by moving the “offset check” closer to the time of use. The problem is that this is very error-prone in complex code bases, and a simple code refactor could reintroduce the vulnerability. Modern C++ provides span to enforce bounds-checked array accesses. Unfortunately, it is not the default, so the developer must opt in to using it. Enforcing the use of such constructs in practice is difficult.

If the language tracked and verified sizes for us automatically, we programmers would no longer have to worry about implementing these checks correctly, and we could be sure that none of these issues exist in our code.
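As a rough illustration of moving the offset check to the time of use, here is a minimal C++ sketch. It is not the Edge code: `TextBuffer`, `shrink`, and `write_at_checked` are hypothetical names, and the sketch only assumes a string whose size can change between an earlier check and the write.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <string>

// Hypothetical stand-in for a string whose size can change between
// an earlier bounds check and the eventual write.
struct TextBuffer {
    std::string data;

    // Models an operation that changes the size of the string.
    void shrink(std::size_t new_size) {
        if (new_size < data.size()) data.resize(new_size);
    }
};

// Re-validates offset and length against the *current* size right
// before copying. Returns false instead of writing out of bounds.
bool write_at_checked(TextBuffer& buf, std::size_t offset,
                      const char* src, std::size_t len) {
    assert(src != nullptr || len == 0);  // precondition on the caller
    const std::size_t size = buf.data.size();
    // Phrased as a subtraction so that offset + len cannot overflow.
    if (offset > size || len > size - offset) return false;
    std::memcpy(&buf.data[offset], src, len);
    return true;
}
```

With a 16-byte buffer, a 3-byte write at offset 12 succeeds. After `shrink(8)`, the same call returns `false` rather than writing past the end. Nothing in the language forces callers to go through `write_at_checked`, which is the enforcement problem described above.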

Temporal memory safety

Temporal memory safety means ensuring that pointers still point to valid memory at the time they are dereferenced.

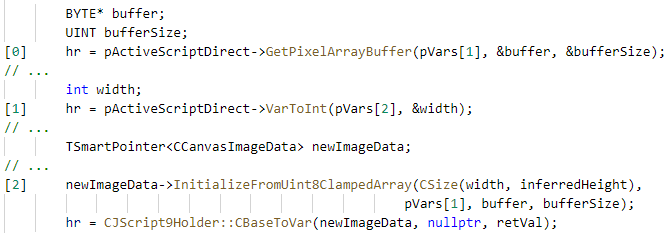

A common use-after-free pattern consists of taking a local pointer to something, performing an operation that may free or move the memory and leave the pointer stale, and then dereferencing the now-invalid pointer. Consider the following source code example from Edge (CVE-2017-8596), discovered by Steven Hunter:

This bug is possible because many complex APIs interact with one another, and the programmer cannot enforce memory ownership across the entire codebase. At [0], the program gets a pointer to a buffer owned by a JavaScript object. Then, because of the complexity of the language, at [1] it may run more JavaScript code in order to obtain another variable. At [2], it uses the buffer and width to create a new JavaScript object that copies the contents behind that pointer.

The issues include:

- The program mixes manually managed memory with garbage collection. The garbage collector tracks JavaScript objects, but it cannot know whether a raw pointer into one of them exists.

- Because VarToInt calls back into JavaScript at [1], the JS program can change the state and free the owner of the aliased pointer.

The vulnerability is similar to an iterator invalidation bug: modifying the state can leave every pointer into JavaScript’s internal state dangling. There are many ways to fix this issue. However, in programs as complex as browsers, it is nearly impossible to prove statically that this bug is absent. The source of the problem is aliasing pointers that point to mutable state. C and C++ do not provide the tools needed to enforce the prevention of these bugs. It is recommended to always use “smart pointers” to track memory ownership.
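The stale-pointer pattern in this section can be sketched in a few lines of C++. This is an illustrative sketch under simplifying assumptions, not the Edge code: `Image` and `read_pixel_after_callback` are hypothetical names, and a `std::vector` stands in for the buffer owned by a JavaScript object.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical stand-in for an object whose buffer may be reallocated
// or shrunk by code that runs in the middle of an operation.
struct Image {
    std::vector<int> pixels;
};

// Unsafe shape (left as a comment on purpose; this is the bug pattern):
//   int* p = img.pixels.data();  // take a raw pointer into the buffer
//   callback(img);               // callback may resize img.pixels
//   return p[index];             // p may now dangle
//
// Safer shape: run the callback first, then reach the element through
// the owning container and re-check the bounds at the time of use.
bool read_pixel_after_callback(Image& img, std::size_t index,
                               const std::function<void(Image&)>& callback,
                               int& out) {
    assert(callback);  // precondition: a callable must be supplied
    callback(img);     // may resize or clear img.pixels
    if (index >= img.pixels.size()) return false;  // leave `out` untouched
    out = img.pixels[index];
    return true;
}
```

The safer shape holds no raw pointer across the callback, so there is nothing to go stale. As with the bounds check, this is a convention the programmer must remember; the compiler does not reject the unsafe shape.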

Data races

A data race occurs when two or more threads in a single process access the same memory location concurrently, at least one of the accesses is a write, and the threads do not use any exclusive locks to control their access. Maintaining spatial and temporal memory safety becomes even more difficult and error-prone when data is shared across multi-threaded execution. Even brief periods of unsynchronized memory sharing can let another thread modify data that is used to reference memory. Among other things, this enables time-of-check vs. time-of-use (TOCTOU) bugs that result in spatial and temporal memory safety vulnerabilities.

The VMSwitch vulnerability that Jordan Rabet presented at Black Hat 2018 shows the impact a data race can have. The code in the first VMSwitch listing at the end of this post is called when a virtual machine sends a message to the host. As a result, it can be called in parallel with other control messages and packets being processed. This is a problem because those control message handlers update the information without locking it.

The second VMSwitch listing at the end of this post, which is used by a number of control message completion handlers, shows how the information being updated is used.

As a result of this unsynchronized access, the old opHostData->AllocatedRanges values [1] can be used with the new buffer base, resulting in an out-of-bounds write [3].

To prevent these kinds of vulnerabilities, the data structures that different threads access must be locked for the duration of their processing. However, C++ offers no easy way to statically enforce such checks.
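A minimal sketch of that locking discipline in C++ might look like the following. It does not reproduce the VMSwitch code: `HostState`, `set_buffer`, and `copy_into_range` are hypothetical names, and the sketch assumes a single buffer with a single valid range.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical host-side state: the buffer and the range that indexes
// into it must always be updated and read together.
class HostState {
public:
    // Replaces the buffer and its valid range under one lock, so a
    // reader can never pair an old range with a new buffer.
    void set_buffer(std::vector<unsigned char> buffer,
                    std::size_t offset, std::size_t count) {
        assert(offset <= buffer.size() && count <= buffer.size() - offset);
        std::lock_guard<std::mutex> lock(mutex_);
        buffer_ = std::move(buffer);
        offset_ = offset;
        count_ = count;
    }

    // Copies at most `len` bytes into the current range while holding
    // the same lock. Returns the number of bytes actually copied.
    std::size_t copy_into_range(const unsigned char* src, std::size_t len) {
        std::lock_guard<std::mutex> lock(mutex_);
        const std::size_t n = len < count_ ? len : count_;
        if (n != 0) std::memcpy(buffer_.data() + offset_, src, n);
        return n;
    }

private:
    std::mutex mutex_;
    std::vector<unsigned char> buffer_;
    std::size_t offset_ = 0;
    std::size_t count_ = 0;
};
```

Keeping the members private and taking the mutex in every method is the usual way to approximate this in C++. The guarantee still rests on every method remembering to lock, which the compiler does not verify.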

What We Can Do

Addressing the issues described above requires a combination of approaches. “Modern” C++ constructs like span<T> should be used whenever possible, because they can at least partially prevent memory safety issues. However, modern C++ is still not entirely memory-safe or data-race-free. Furthermore, these features rely on programmers always “doing the right thing,” which may be difficult to enforce in complex and ambiguous codebases. C++ also lacks good tools for wrapping unsafe code in safe abstractions, so while it may be possible to enforce correct coding practices locally, it can be very hard to build software components in C or C++ that compose safely.

Beyond this, whenever possible, software should eventually move to a fully memory-safe language such as C# or F#, which guarantee memory safety through runtime checks and garbage collection. After all, memory management should only get complicated when it needs to.

If there are legitimate reasons for needing the speed, control, and predictability of a language like C++, consider moving to a memory-safe systems-level programming language. In our next post, we’ll explore why we think the Rust programming language is currently the best choice for the industry to adopt whenever possible, thanks to its ability to express systems-level programs in a memory-safe way.

Ryan Levick, Principal Cloud Developer Advocate

Sebastian Fernandez, Security Software Engineer


![{ hostData->BufferId = BufferId; [0] hostData->Buffer = Buffer; [1] //hostData->BufferSize = BufferSize; hostData->CtrlMessagesAllocatedBufferSize = BufferSections[NumBufferSections-1].SubAllocationSize; for (controlAllocIndex = 0; controlAllocIndex < RNDISDEV_HOST_MAX_PENDING_CONTROL_MESSAGES; ++controlAllocIndex) { status = RndisDevHostInternalAllocateSingleSubAllocation(hostData, hostData->CtrlMessagesAllocatedBufferSize, &(opHostData->AllocatedRanges), &(opHostData->AllocatedBuffer)); } }](https://dodcybersecurityblogs.com/wp-content/uploads/2019/07/wp-content-uploads-2019-07-code3.webp)

![[2] destination = (PVOID)((UINT_PTR)HostData->Buffer + currentRanges->Range[0].ByteOffset + offsetIntoCurrentRanges); currentBytesToCopy = currentRanges->Range[0].ByteCount - offsetIntoCurrentRanges; if (currentBytesToCopy > bytesLeftToCopy) { currentBytesToCopy = bytesLeftToCopy; } [3] RtlCopyMemory(destination, (PVOID)((UINT_PTR)Buffer + (BufferSize - bytesLeftToCopy)), currentBytesToCopy);](https://dodcybersecurityblogs.com/wp-content/uploads/2019/07/wp-content-uploads-2019-07-code4.webp)